Is there any real difference between HE laundry detergent and regular?

Doing laundry isn’t as simple as it once was. For example, High Efficiency (HE) detergent is specially made for washing machines that use less water. So what’s the difference between HE laundry detergent and regular detergent? Are they pretty much the same?

Can you make rooms look bigger with paint? Yes – and here’s how

You can make your rooms look bigger with creative color-based optical illusions that can be used in almost any space. Here’s how to make rooms look bigger with paint!

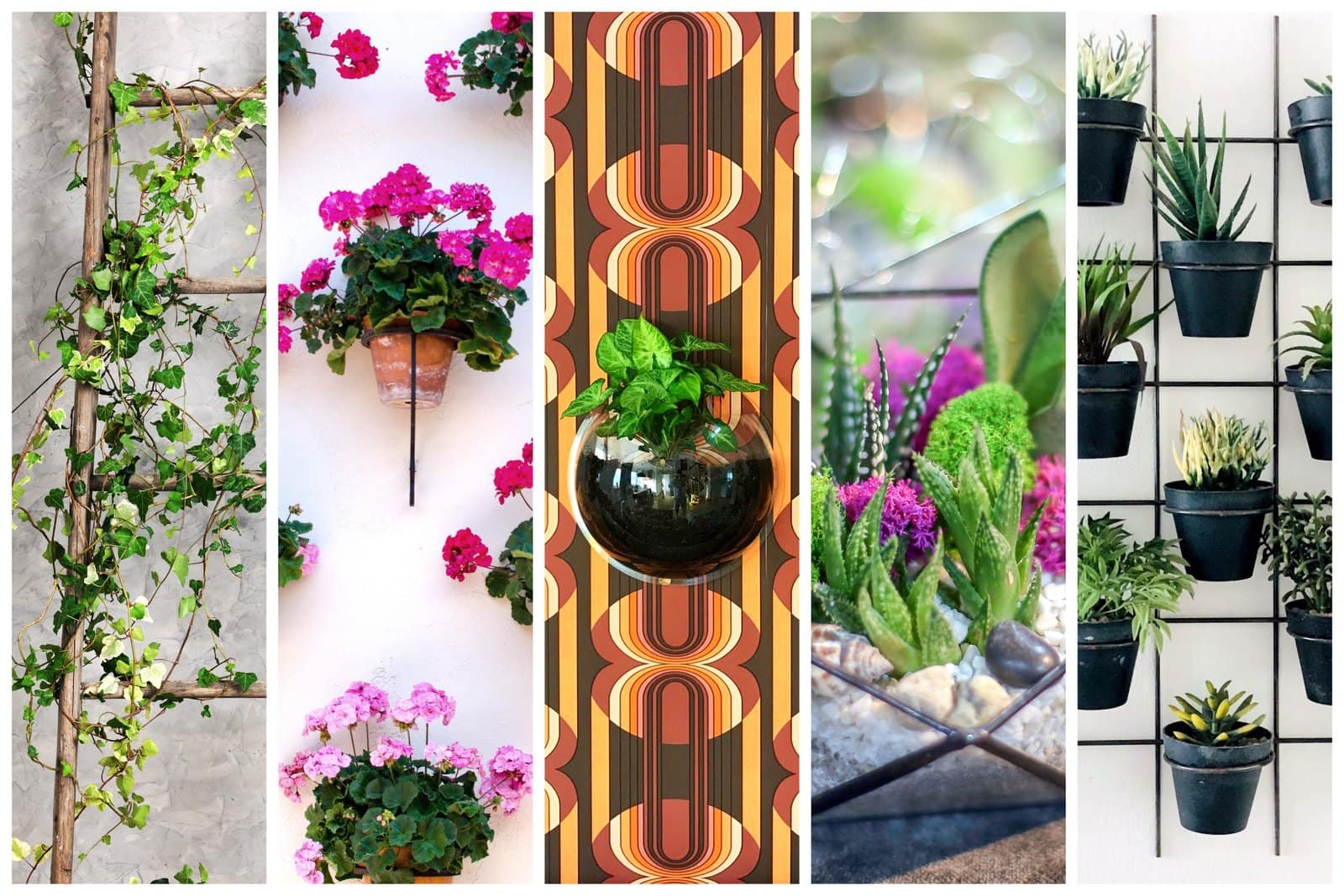

30 creative indoor plant decorating ideas (and none of them are wreaths!)

Want to get a little more green in your home? Maybe one of these creative indoor plant decorating ideas will inspire your next decor project.

Holiday craft basics: Making dried oranges & apples

Dried oranges and apples are easy, crafty country accents that you can add to wreaths, swags, baskets, or any craft using dried natural items.

Is it bad to touch receipts? Here’s why it might be

Sometimes even the simplest little things can be a health hazard. It turns out those long thermal cash register receipts are often coated with chemicals, such as BPA or BPS, that you probably don’t want to touch.

How to make hard-boiled eggs in 2 fast & easy ways: Instant Pot or stovetop

If your hard-boiled eggs have turned out less than ideal, here are some easy tips for making them either in the Instant Pot or on the stove.